- The US military used Anthropic's AI in the operation to capture Nicolas Maduro

- Anthropic's AI tool Claude was deployed despite usage policies against violence

- Anthropic CEO urges AI regulation due to concerns over lethal military use

The US military used artificial intelligence in its operation to capture then-Venezuelan President Nicolas Maduro last month, according to a report by the Wall Street Journal. Anthropic was the first AI model developer whose technology was used by the Pentagon in classified operations.

Military adoption is regarded as a major credibility boost for AI companies, since it lends them legitimacy and helps justify their high, investor-driven valuations in a fiercely competitive landscape.

Anthropic's usage guidelines prohibit Claude from being used to facilitate violence, develop weapons or conduct surveillance, yet the AI tool was used in the operation.

Maduro and his wife were captured in Caracas after several sites were bombed.

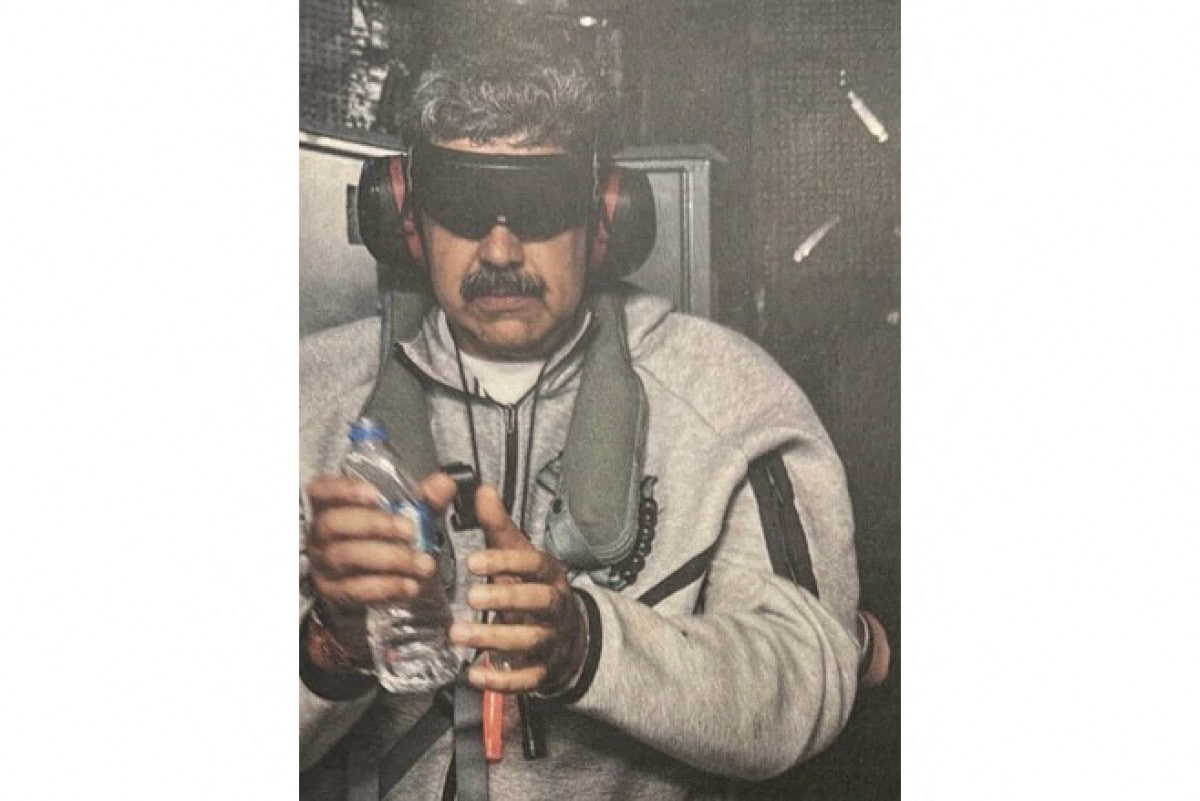

Venezuela's President Nicolas Maduro onboard the USS Iwo Jima after the US military captured him on January 3, 2026.

Photo Credit: AFP

"Any use of Claude, whether in the private sector or across government, is required to comply with our Usage Policies, which govern how Claude can be deployed. We work closely with our partners to ensure compliance," an Anthropic spokesperson said.

Claude was deployed through Anthropic's partnership with Palantir Technologies, a data company whose tools are used by the Pentagon. Anthropic's concerns about how Claude is used have led US officials to consider cancelling contracts worth up to $200 million, the Wall Street Journal reported.

Anthropic Chief Executive Dario Amodei has called for greater regulation and guardrails to prevent harm from AI. He has also publicly expressed concern about AI being used in autonomous lethal operations and domestic surveillance, two major sticking points in the company's current contract with the Pentagon.

Dario Amodei, co-founder and CEO of Anthropic

Photo Credit: AFP

Defence Secretary Pete Hegseth announced in January that the Pentagon would not work with AI models that "won't allow you to fight wars", a remark that referred to discussions with Anthropic, the Journal reported.

Anthropic had signed the $200 million contract last summer.

Many AI companies are building custom tools for the US military, most of which are available only on unclassified networks typically used for military administration. Anthropic's model is the only one available in classified settings, through third parties, but the government is still bound by the company's usage policies.
