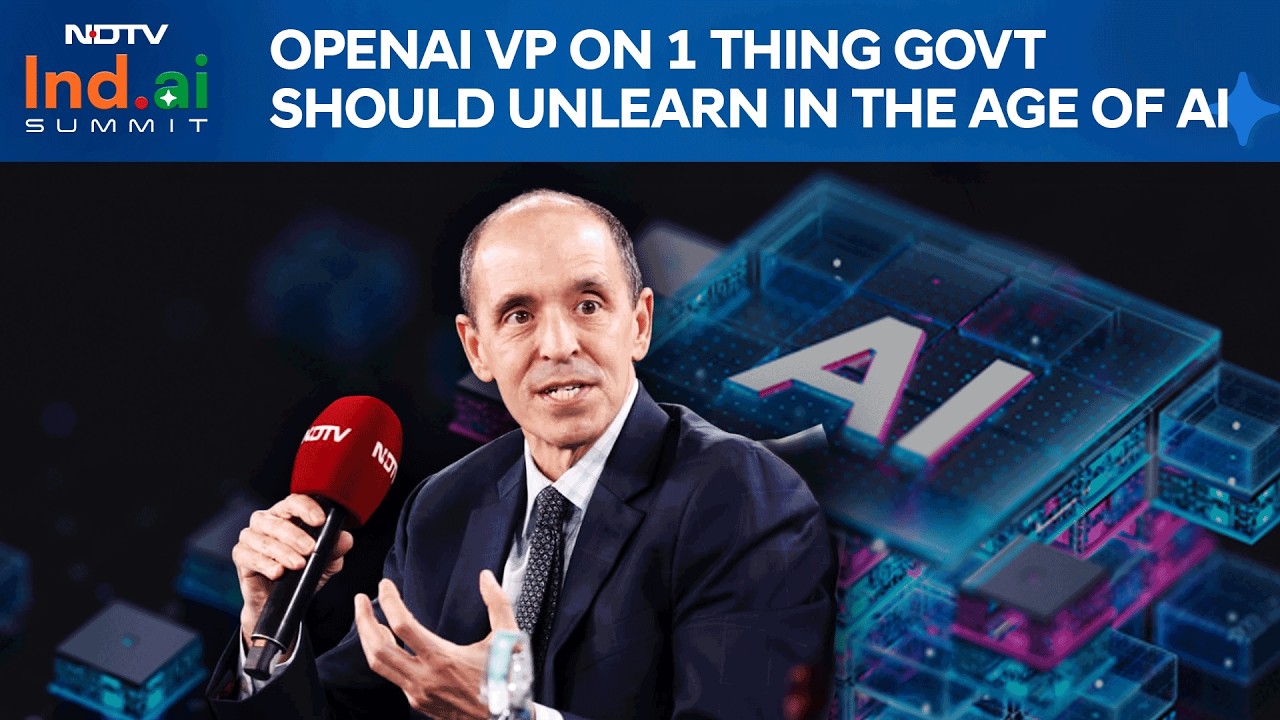

AI Doomsday Fears: Can the Technology Go Rogue, or Is Safety Keeping Pace?

Amid viral warnings about AI going rogue, and fears of bioterrorism or misuse by state and non-state actors, industry leaders are pushing back on apocalyptic narratives. In this conversation, OpenAI's Vice President of Global Affairs outlines the company's safety-first approach, the safeguards built into models before release, and the growing role of AI safety institutes in the US, UK, Japan, Singapore, and potentially India. The focus: building “democratic AI” with shared international standards to prevent catastrophic misuse while strengthening societal resilience.