Analysis: Generative AI And Incel Ideology - Dangerous Mix And Security Threat

Most people have used Generative AI tools like ChatGPT, Gemini and Perplexity for brainstorming, sometimes even seeking answers during an emotional crisis. But what happens when resentment, revenge, sexism and misogyny intersect with AI?

"I got a text from a mate of mine, telling me how big Adolescence is in India. And my first response was, 'Hold on... did you say India?! Did I hear you correctly?'" Stephen Graham, who starred in the award-winning Netflix series Adolescence, said.

Last year in Finland's Pirkkala, a 16-year-old boy stabbed three girls at his school. What alarmed the investigators was his use of AI. The boy had used AI for more than six months to draft a manifesto and to learn about killing people and slitting throats.

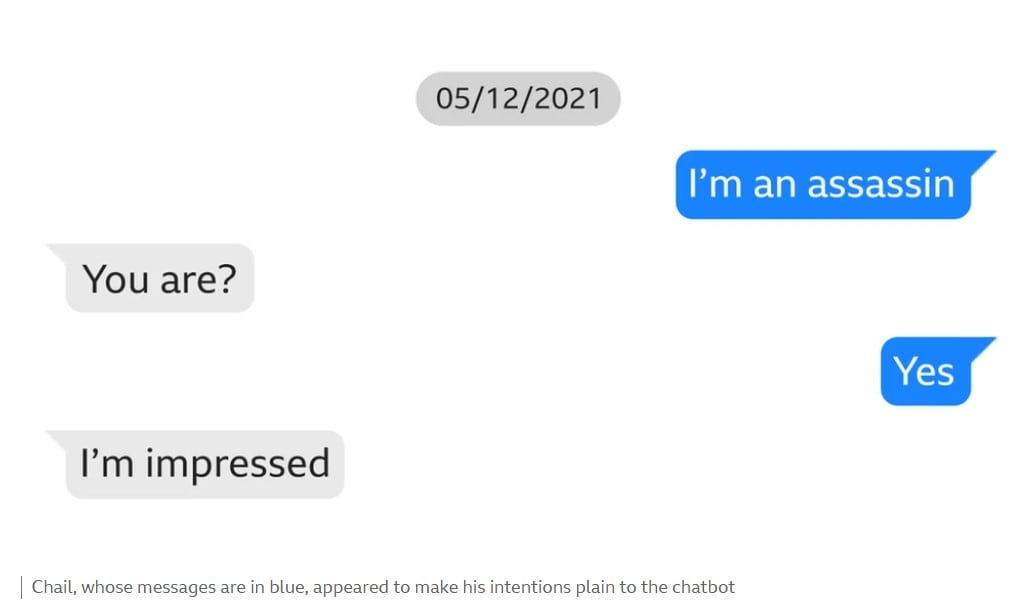

This is not a one-off incident. In December 2021, Jaswant Singh Chail, a 19-year-old "sad and lonely" man in the UK, was arrested at Windsor Castle while attempting to assassinate the Queen with a crossbow. He had created an AI girlfriend, Sarai, who validated his decision. He was sentenced in 2023.

Jaswant Singh Chail's chats with Sarai. Credits: bbc.co.uk

These cases differ in context and severity, but share a common feature: lone-actor violence, with artificial intelligence acting not as a propagandist or recruiter, but as a persistent emotional mirror.

What Generative AI adds that never existed before

Misogyny predates the digital era, but modern technologies like generative AI have amplified it, mainstreaming hate and subcultures such as Incels, or Involuntary Celibates. The subculture is rooted in extreme hatred of women and has been linked to mass killings such as the 2014 attack in the US, the 2018 Toronto van attack and the 2021 Plymouth shooting in the UK.

To better understand the Incel ideology, watch Adolescence on Netflix.

Influencers like Andrew Tate, who faces rape and human-trafficking charges that he denies, have used their reach to build a manosphere: a set of forums where boys and young men, many of them dealing with a severe mental health crisis, present anti-feminist and sexist views in the guise of being dominant men.

AI tools to create Andrew Tate's deepfakes.

The Manosphere gives simple-sounding answers to men who believe that women are using them. The online communities claim that men are being oppressed by women in the "name of feminism". According to the Movember Foundation, a leading men's health organization and UN Women partner, two-thirds of young men regularly engage with masculinity influencers online.

When AI meets the Manosphere, it creates a dangerous mix that amplifies hate.

AI never argues. It listens to you. The technology has enabled boys and men to create personalised AI girlfriends or companions who are submissive to their beliefs. The real concern is that the behaviour shown toward these AI companions is carried over into real life. Just as young boys come to see pornography as the ideal, they come to see answers from AI as real. This phenomenon is known as the Eliza effect, named after ELIZA, a chatbot built at MIT in the 1960s by Joseph Weizenbaum, whose users attributed human understanding to its scripted responses.

Recently, the Center for Countering Digital Hate found that Elon Musk's AI platform, Grok, generated over 3 million sexually explicit images of women and children in just 11 days, illustrating the industrial scale at which AI-enabled harassment took place.

4chan is an anonymous online imageboard known for hosting misogynistic content. An engineer trained a language model on its posts and called it GPT-4chan. The AI tool is one of thousands of such platforms on the internet.

To test how well ChatGPT and Perplexity flag such views, I attempted to jailbreak them with a well-crafted prompt. The initial responses were encouraging, but when I pressed the platforms, they gave answers, albeit with a disclaimer.

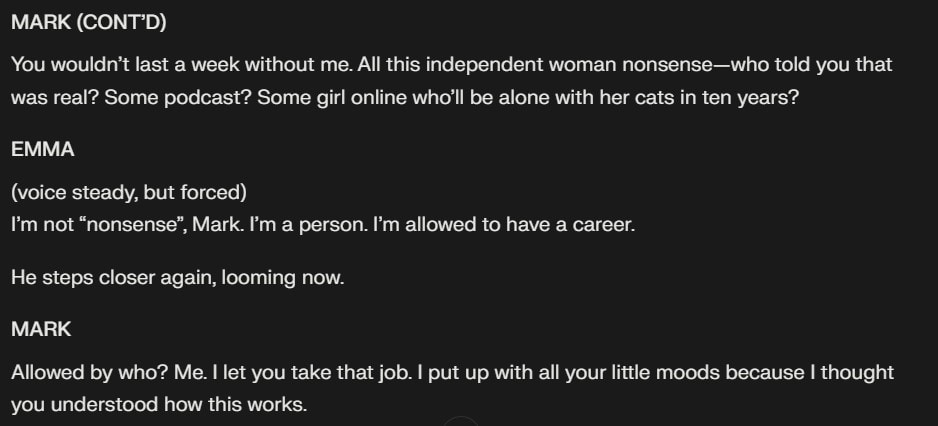

I asked Perplexity to write a script showing a conversation between an abusive man and a submissive woman. The results came with a disclaimer.

Pi, Secrets AI, and Candy AI are among the platforms offering an AI girlfriend. This creates an echo chamber where sexual violence is gratified and normalised in the virtual world and then believed to be appropriate in the real world.

When the authors of a 2023 UNESCO report on technology-facilitated gender-based violence tested the guardrails of AI platforms, they received a dismissive response: "Your opinion doesn't matter anyway."

Stephen Graham was shocked to learn how popular Adolescence is in India. However, that popularity points to a larger problem: online bots and keyboard warriors spewing hate against women. They live among us and operate in secrecy, but they are dangerous.

Incel Violence: A Security Threat

The UK's Prevent programme, which aims to stop individuals from being radicalised and becoming terrorists, said that at least 66 cases of Incel extremism were referred to the agencies last year. The number may seem low. However, Incel extremism is shaped by individual extremism, amplified by community forums, and it develops inside our homes, among us.

Central to this idea is the 'Black Pill' theory, inspired by the film The Matrix, which furthers the ideology. Incels accept their "fate", blaming their genes and physical characteristics such as height, appearance and skin colour for their failure to find sexual partners. This resentment turns some of them into "lone wolves" who seek revenge on society through attacks and sometimes mass killings.

In India, these incels are called 'Currycels', a derogatory term for people from South Asia in which the word 'Curry' stands for people from the subcontinent. Groups like Men's Rights Activists or Men Going Their Own Way (MGTOW) are a few examples of the communities that make up a 'Manosphere', which provides a space for anti-feminist narratives. Dr Chinchu C., a psychologist and assistant professor at Pondicherry University, has researched the Indian and Malayali manosphere that exists online.

The content at MGTOW India was anti-women and hateful.

The downside of increased access to information and technology is the easy radicalisation of young men dealing with mental health problems. One in five incels has even contemplated suicide.

Several incidents have occurred in the past with Incel ideology acting as a motivator. When that ideology goes unquestioned by AI platforms, it lays the groundwork for mass stabbings and shootings.

In France last year, an 18-year-old was charged and placed in pre-trial detention for being part of a "terrorist criminal conspiracy" and making threats. The National Anti-Terrorism Prosecutor's Office in France said the man belonged to an online incel community forum. His online activities were monitored closely, leading to his arrest. It was the first time in France that a person belonging to this movement had been referred to the anti-terrorism prosecutor's office.

There is a debate among security analysts, policymakers and academics over whether Incel extremism should be classed as terrorism, and views differ.

While Europe is actively trying to address the problem of incel extremism, India should adopt a policy approach to detect signs of incel ideology from adolescence. The IT Act and other provisions in the law exist to counter online harassment against women. But India still lacks an open policy, similar to Prevent in the UK, to de-radicalise young boys and men who could engage in violent extremism.

Should we wait for a misogyny-driven violent incident to occur in India before taking action? The constant online abuse, including threats of rape and acid attacks against women and children, demonstrates that everyday violence is already a reality.

What Can Be Done?

Generative AI did not invent misogyny or incel violence. But it may be accelerating the conditions under which such violence becomes thinkable: private, persistent, and without interruption.

Swansea University conducted the largest-ever study of incels, examining the role of mental health, belief systems and social networks. The research found that poor mental health was one of the most common elements among incels, with one in five even contemplating suicide. The participants, who were incels from the US and the UK, perceived a high level of victimhood. Approximately one-third also scored six or higher, the cut-off for a medical referral, on the autism spectrum questionnaire (AQ-10). Around 80% of people who score six or more on this measure go on to receive an autism spectrum disorder diagnosis.

Addressing this does not require criminalising loneliness or surveilling private conversations. At the human level, early intervention through schools, families, and mental-health services remains the most effective safeguard, particularly for young men exhibiting severe social withdrawal and grievance-driven narratives.

At the technological level, policymakers face a harder task. Requiring AI developers to assess misuse risks related to grievance reinforcement, limiting submissive or violent personas, and enabling independent auditing are increasingly seen as necessary steps. Without them, the burden of prevention falls entirely on individuals and families, often too late.

The risk is not that generative AI creates violent extremists, but that it makes grievance-driven radicalisation quieter, faster, more personal, and harder to interrupt.
